Strategies to center user needs for research tools

What does a UX Designer do?

The Childhood Cancer Data Lab builds resources guided by the most pressing needs of our primary users: pediatric cancer researchers. As the Data Lab's UX Designer, I conduct research activities with scientists, such as usability evaluations, semi-structured interviews, and card sorts, to gain insight into their activities, processes, pain points, and behaviors. I work with scientists and engineers at the Data Lab to use this information to improve existing products and services or to create new ones. You can read more about how we collaborate here.

In this post, we will discuss the strategies we use to center user needs during the conceptualization and development phases of our tools and how these activities might benefit your organization too!

Usability and Research Tools

The research tools we design have a lot in common with enterprise tools, which are notoriously difficult to design, implement, and use. Enterprise tools are expected to be generic platforms that cover a large number of use cases and scenarios and serve a variety of roles and skill sets. The person making the buying decision is usually not the end user, so features that appeal to decision makers tend to be prioritized over enhancements that would improve the end-user experience. Enterprise tools are often expensive, and once an organization has invested in one, it is difficult to pivot. As a result, end users are stuck with a tool that, at best, only partly meets their needs. At worst, they are forced to invent workarounds to get their jobs done.

Many parallels can be drawn between enterprise software and research tools. Research tools need to serve a wide user base with varying skill levels. Choices are limited, and researchers are often forced to use a tool that either does not match their skill level or is not flexible enough for the way they work. This results in what I like to call “effort leakage”: researchers spend their primary effort trying to make an ill-fitting tool work, rather than using the tool to further their research goals.

When a research tool is developed without considering who will use it, research becomes less efficient and slows down. In a field where the lives of children depend on good tools, the cost of ill-fitting tools is too high.

Whether we are designing tools or implementing processes, the Data Lab values efficiency. Just take a look at our tips for automating analyses and saving time in your research environment for further proof! Next, we'll tell you how we try to bake efficiency into our tools by ensuring that they are a good fit for our community.

A step back, a shift in mindset

It is helpful to think about a tool as solving two types of problems: a technical problem and a human problem. Let’s use one of the Data Lab’s tools, refine.bio, to demonstrate this concept. During its development, we spent a lot of time defining the problems we wanted to solve. The technical problem entailed obtaining massive amounts of data from various sources and processing and harmonizing it uniformly. The human problem entailed identifying the best way to deliver all of this processed data to researchers in a ready-to-use form.

As a rule, we include scientific, engineering, and design perspectives from the conceptual stages of a project. We define the problem and then outline solutions as a team. Sure, it takes a little time to get started, but we begin with a robust strategy and avoid nasty surprises as we start to implement solutions.

Here are some key things that helped ensure success:

1. At the beginning of every project, we ask ourselves a series of questions adapted from Stephen Gates’s podcast, The Crazy One. This gives us a clear idea of the problem we are trying to solve and who we are solving it for. Visit this blog for a glimpse of those questions.

Below are a few of those questions, modified to better fit our context:

- What are we trying to change and why?

- What are some things that have been done in the past?

We added two more questions to help us focus on the problem and avoid scope creep:

- What is this tool for?

- What is it NOT for?

We run this as an activity: each of us writes down our responses to the questions above, then walks everyone through them and explains the rationale. Finally, we discuss the responses and combine them into a single document.

2. We speak to the community! We interview potential users of the tools we are developing. We talk to researchers who work in a variety of roles to learn from different perspectives.

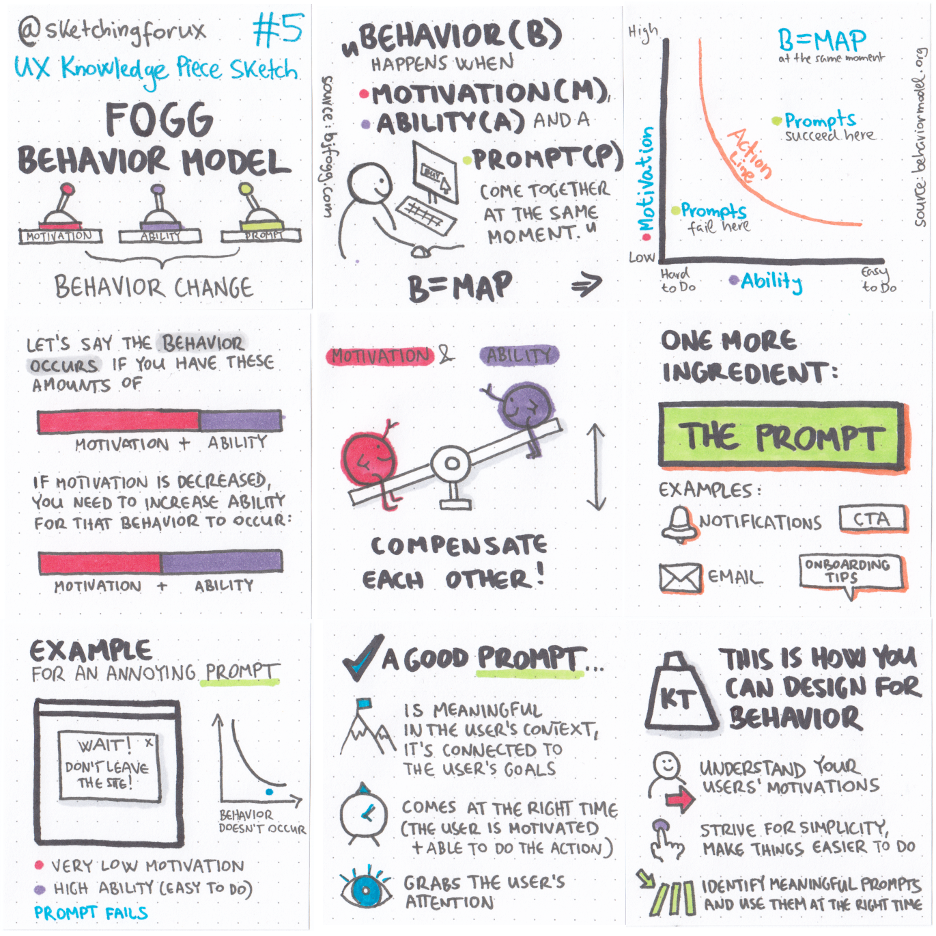

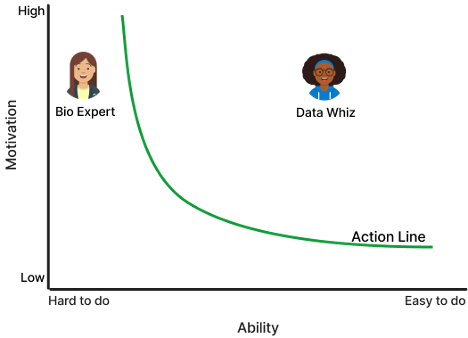

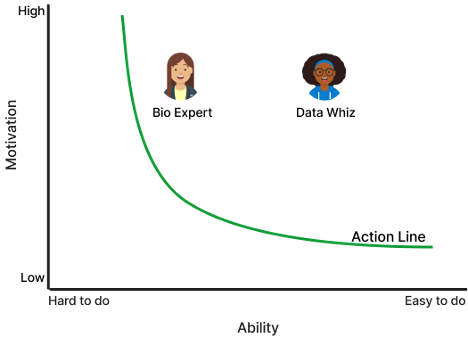

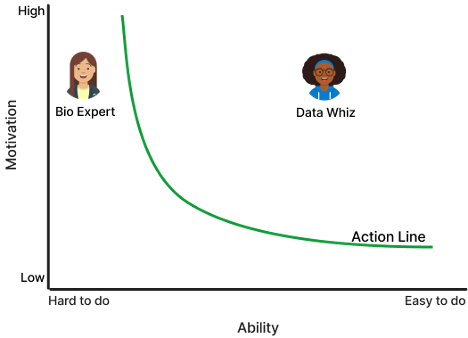

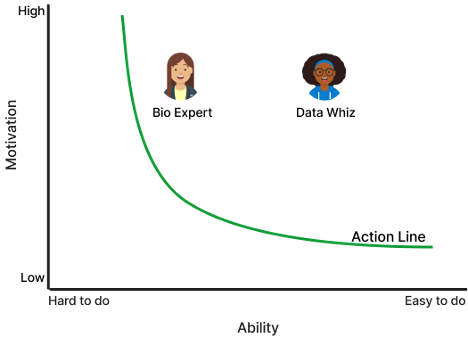

3. We apply the Fogg Behavior Model, which holds that “three elements must converge at the same moment for a behavior to occur: Motivation, Ability, and a Prompt. When a behavior does not occur, at least one of those three elements is missing.” We use this model to make sure our tools are not too challenging to use.
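To make the model a little more concrete, here is a minimal Python sketch. It is a toy, not Fogg's formal model: the 0-to-1 scales, the action-line threshold of 0.25, and the choice to multiply motivation by ability (so that high motivation can partly compensate for low ability) are all illustrative assumptions, and `Moment`, `behavior_occurs`, and `missing_elements` are hypothetical names.

```python
from dataclasses import dataclass

# Hypothetical threshold: the "action line" a moment must clear for the behavior to happen.
ACTION_LINE = 0.25


@dataclass
class Moment:
    motivation: float  # 0.0 (none) to 1.0 (very high); illustrative scale
    ability: float     # 0.0 (very hard) to 1.0 (very easy); illustrative scale
    prompt: bool       # was the user prompted at this moment?


def behavior_occurs(m: Moment, action_line: float = ACTION_LINE) -> bool:
    """Toy check: a prompt must be present AND motivation x ability must clear the line."""
    if not m.prompt:
        return False
    # Multiplying lets high motivation offset low ability (an assumption of this sketch).
    return m.motivation * m.ability >= action_line


def missing_elements(m: Moment, action_line: float = ACTION_LINE) -> list[str]:
    """List which elements kept the behavior from happening (empty if it happens)."""
    missing = []
    if not m.prompt:
        missing.append("prompt")
    if m.motivation * m.ability < action_line:
        # Report the weaker of the two factors as the one holding the user back.
        missing.append("ability" if m.ability <= m.motivation else "motivation")
    return missing


# A motivated Bio Expert facing a hard-to-use tool (hypothetical numbers).
before = Moment(motivation=0.9, ability=0.2, prompt=True)
print(behavior_occurs(before), missing_elements(before))

# The same user after setup has been made easier.
after = Moment(motivation=0.9, ability=0.5, prompt=True)
print(behavior_occurs(after), missing_elements(after))
```

In this toy, raising ability from 0.2 to 0.5 is enough to clear the line without touching motivation, which mirrors why we focus on making tools less challenging: ability is the lever a tool designer controls most directly.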

User Personas

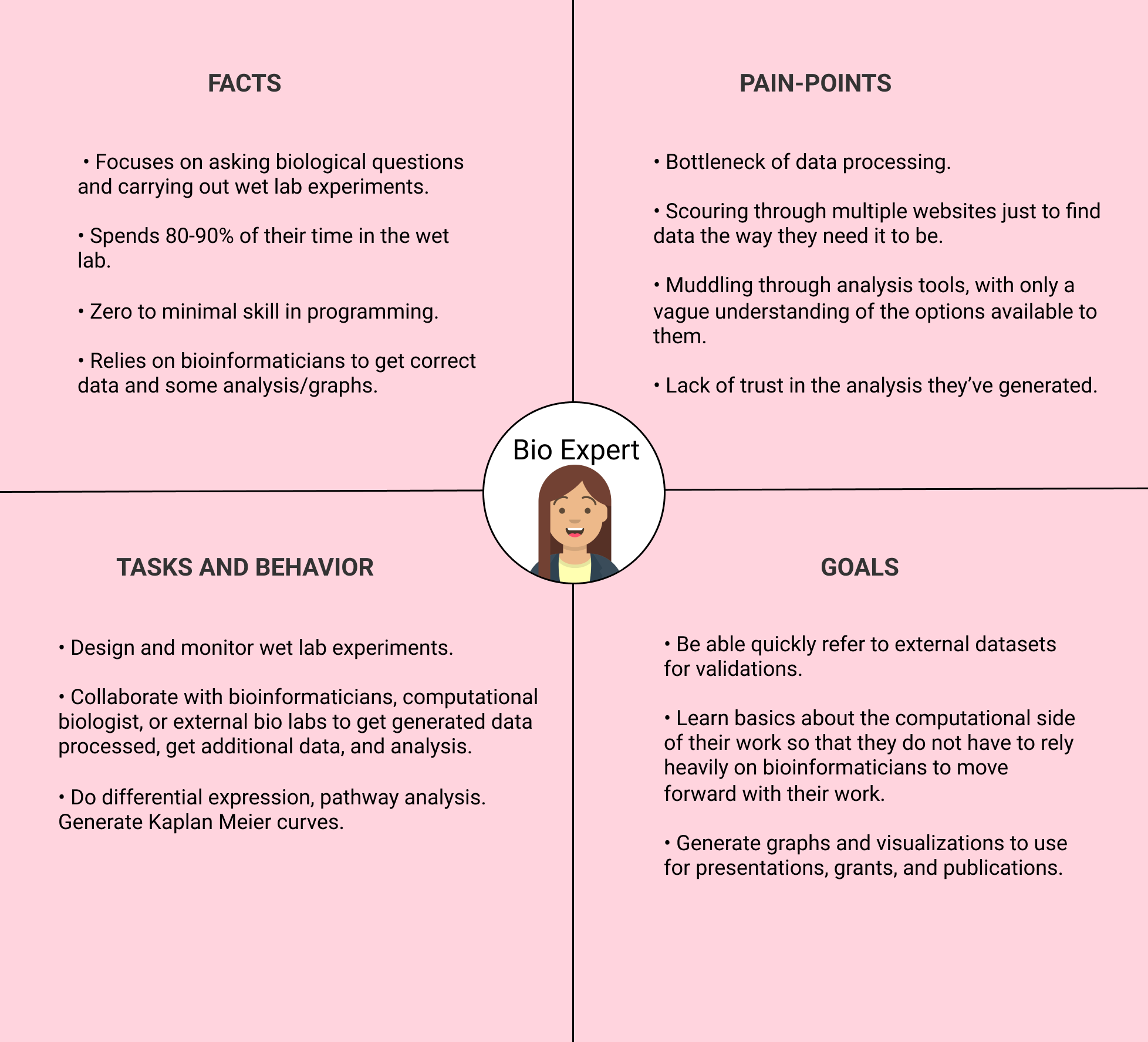

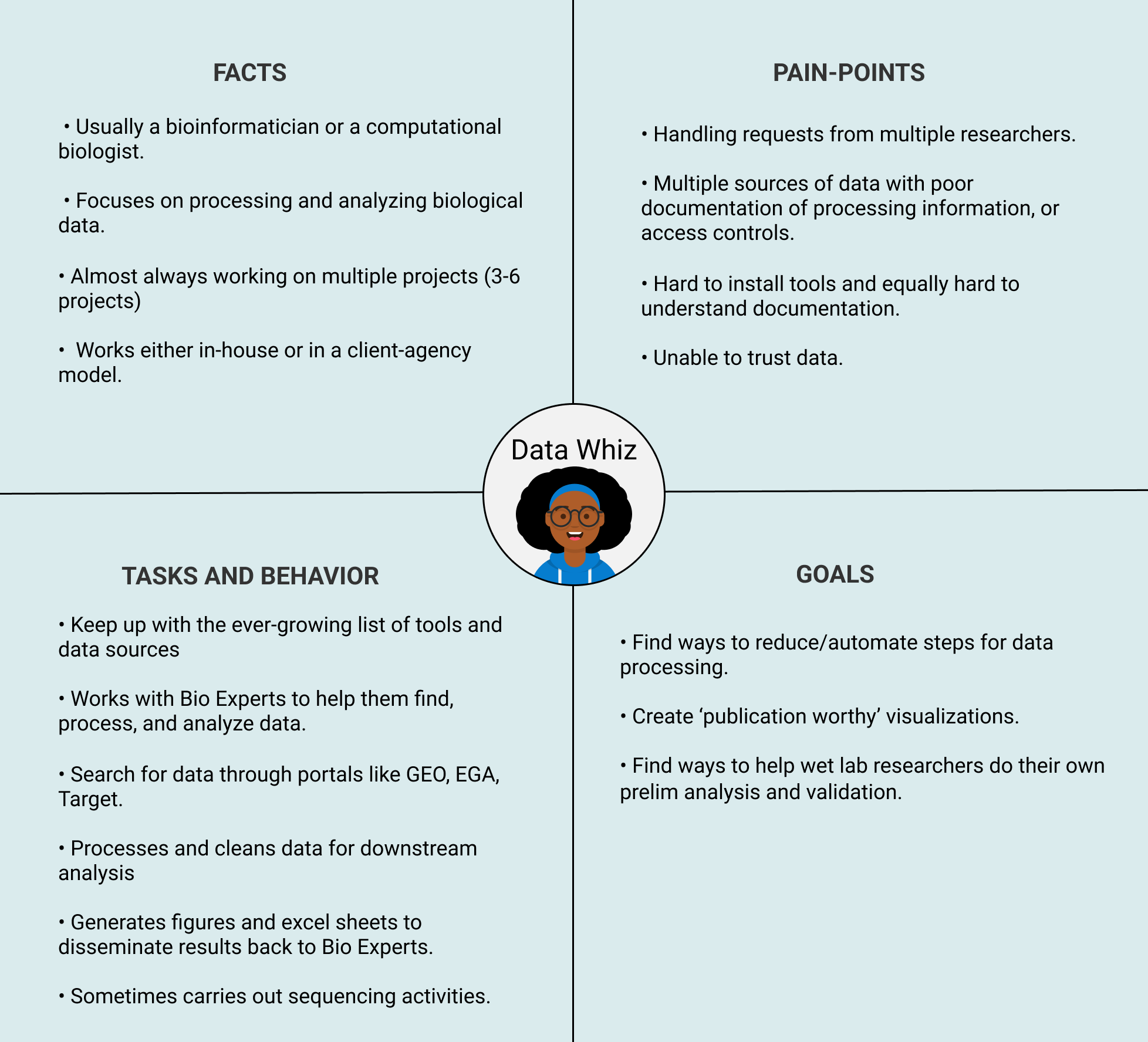

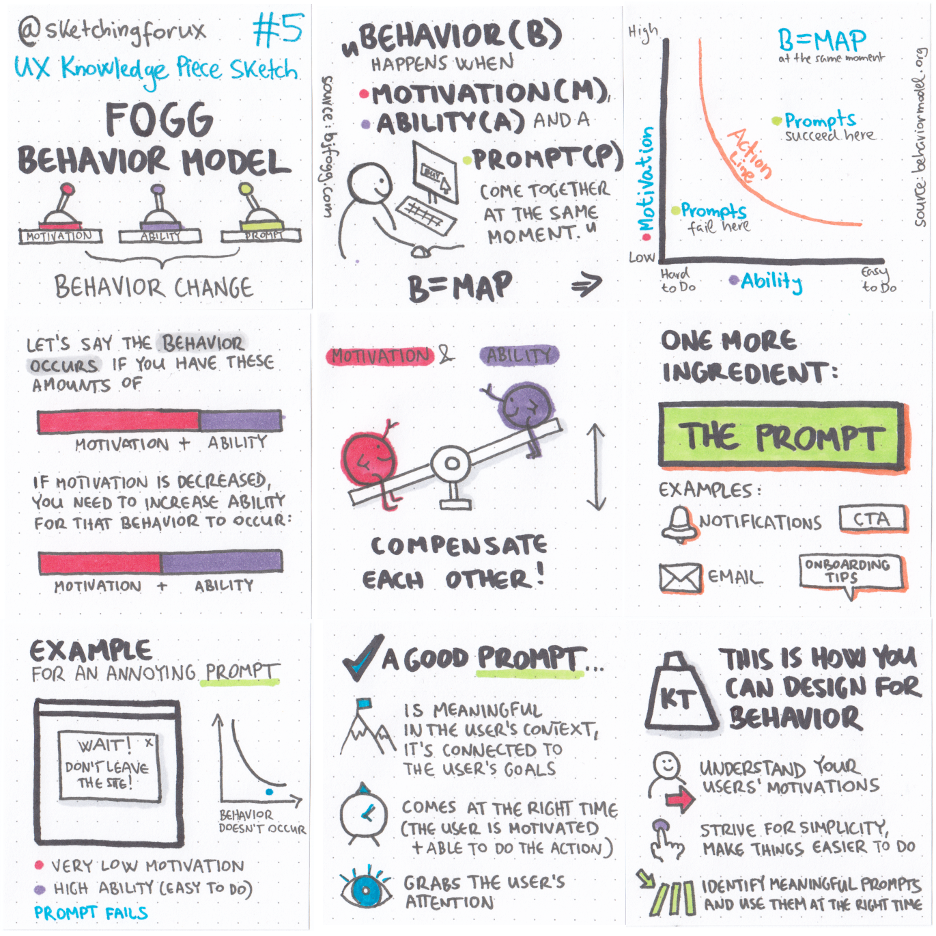

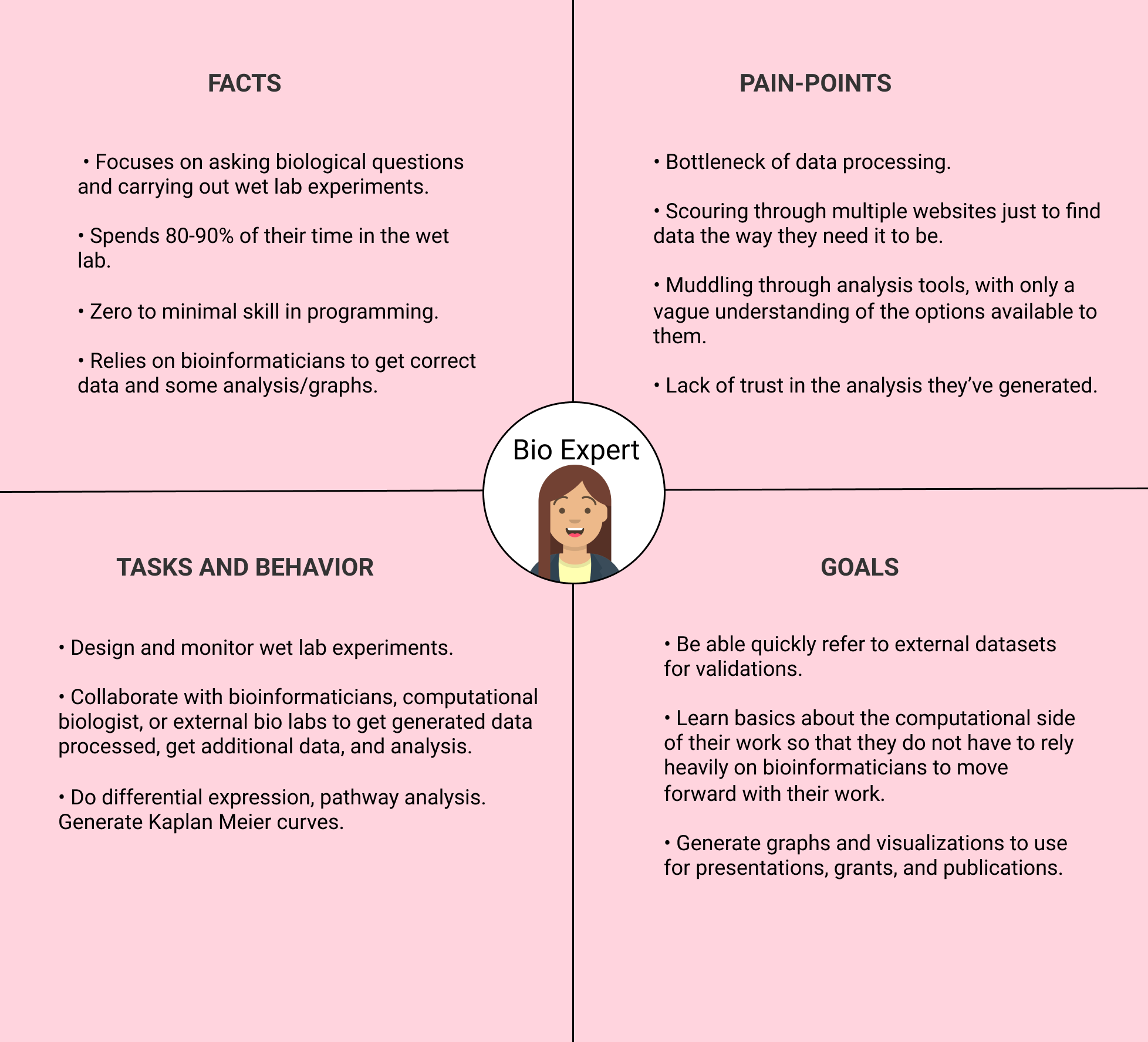

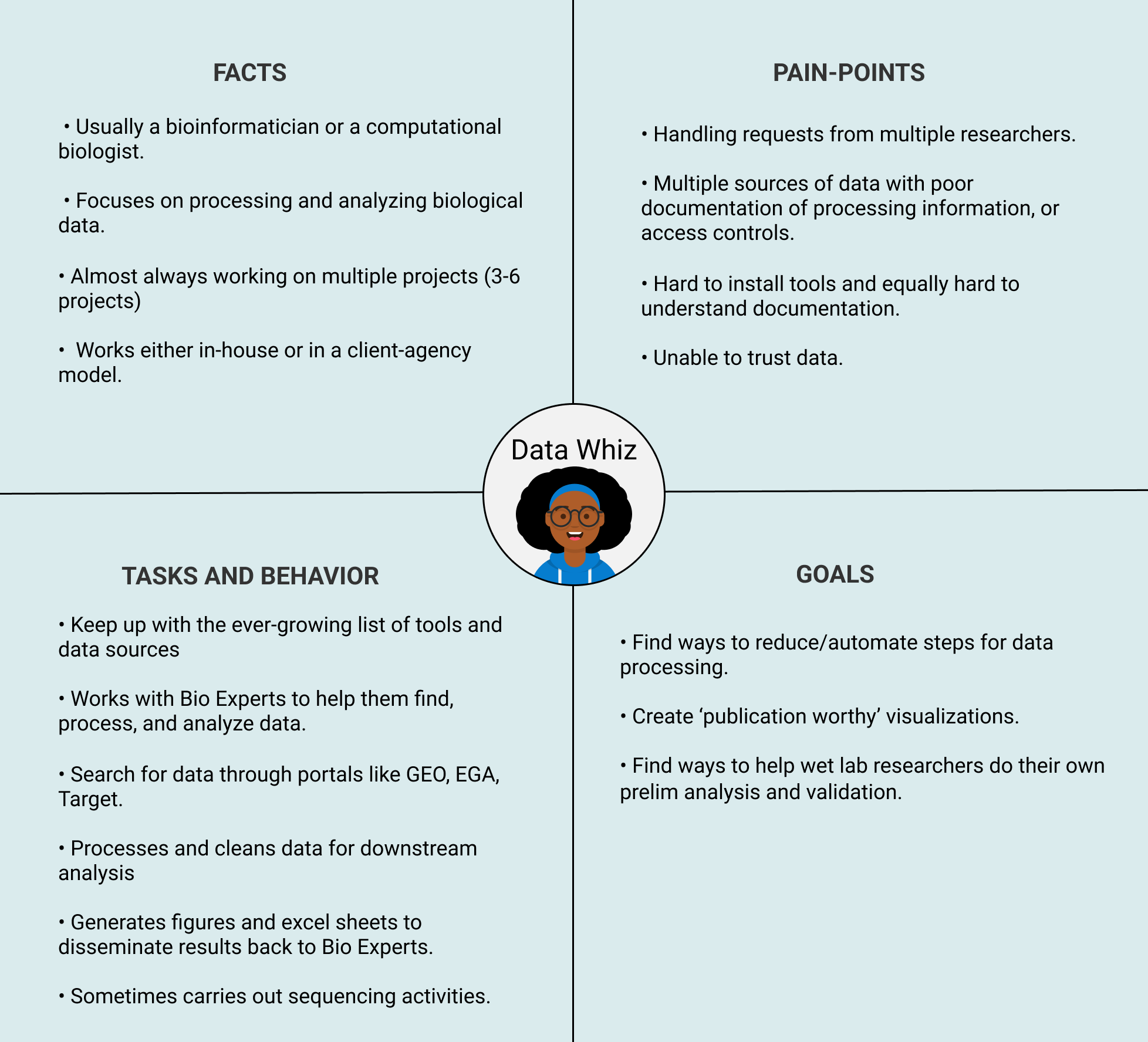

Based on the research we conducted for refine.bio, we defined two user archetypes, sometimes referred to as personas: Bio Experts and Data Whizzes. We used Lean Personas (also called proto-personas) to quickly identify the distinct needs of each type of user. We intentionally omitted demographics because our research indicated that they did not play a major role in our context.

Putting it all together

The ideal refine.bio experience is different for each of these archetypes.

- For Data Whizzes, an R or Python client plus documentation on how to fetch data would be sufficient for them to use the data downstream. Data Whizzes have the skills needed to work with standard data formats and conduct downstream analyses.

- Bio Experts’ strength lies in biological domain knowledge. Even if they acquire data via a web-based portal, they do not have the technical skills to analyze it and extract insights. Bio Experts need to expend a lot more effort than Data Whizzes to get value from refine.bio data.

We introduced refine.bio examples to help Bio Experts bridge the gap between acquiring data and getting initial results. The examples are notebooks that demonstrate the most common analyses, such as differential expression and pathway analysis, and that users can download and run locally. Users can easily swap the example dataset for another refine.bio dataset.

At first, the examples were hosted on GitHub, and users had to either download or clone the repository before they could explore the example notebooks. We conducted a usability evaluation in which we presented Bio Experts with refine.bio examples and asked them to follow along with an analysis.

We uncovered several issues that made it difficult for Bio Experts to get started:

- GitHub was too challenging to navigate. Using it required learning an additional concept just to get started.

- Bio Experts had to take many steps before they could open the example R notebook and start working on the core of their tasks.

- The instructions and commentary within the notebooks were hard to follow.

We revamped refine.bio examples based on the results of the usability evaluations.

- We designed a website so that users no longer have to use GitHub to get the files. The website also made it easier to find the different examples.

- We added sections to each R notebook that walk users through setup and explain how to use the notebook with a different refine.bio dataset.

- We rendered the notebooks in the browser so users can more easily explore them and discover what they offer.

- We introduced a more comprehensive Getting Started guide, along with links to R tutorials.

We conducted another round of usability evaluations to validate the changes and learned that users found refine.bio far less intimidating! They were able to download and follow along with the notebooks with less effort and, more importantly, less stress.

Little by little, one travels far

We have presented a neat, sanitized example, but in reality the problems the research community tackles are complex, and a single, all-encompassing solution is rarely the answer. It is easy to get overwhelmed and go down the rabbit hole of trying to do everything at once. Remember that creating tools is an iterative process. Not everything needs to be solved now, and it doesn’t need to be perfect. It only needs to be usable.

Think of it as baking a layer cake. Start with the base: the smallest problem with the highest impact that you can address. Then add the next most important unit of work, and so on. Finally, you can address the nice-to-haves: the icing on the cake.

This principle can also be applied to the strategies we’ve laid out above:

- Talk to at least 5 users. If you cannot speak with 5, talk to 1. If that isn’t possible, talk to a coworker who isn’t involved with the project. Even a single conversation can provide useful perspectives and insights. Guerrilla testing is one way to get quick feedback from users.

- Do the questions activity with the teams you work with. If that isn’t possible, do it with your immediate team, and if that is also not possible, do it on your own.

- Apply the Fogg Behavior Model to smaller interactions, like structuring READMEs, writing docs, and even adding comments to code!
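The “at least 5 users” rule of thumb is commonly traced to Jakob Nielsen and Tom Landauer's model of usability testing, in which the share of usability problems found by n test users is 1 - (1 - L)^n, where L is the proportion of problems a single user uncovers. Nielsen reported an average L of about 0.31 across the projects he studied; that figure is his, not ours, and the rate for your own tool may differ considerably. The minimal sketch below (the function name `share_of_problems_found` is hypothetical) shows why each additional user adds less than the one before, and why even one conversation beats none.

```python
def share_of_problems_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Nielsen-Landauer estimate of the fraction of usability problems found.

    per_user_rate is the proportion of problems a single test user reveals;
    0.31 is Nielsen's reported cross-project average, not a universal constant.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= per_user_rate <= 1.0:
        raise ValueError("per_user_rate must be between 0 and 1")
    # Each user independently misses a problem with probability (1 - rate),
    # so the chance that all n users miss it is (1 - rate) ** n.
    return 1.0 - (1.0 - per_user_rate) ** n_users


for n in (0, 1, 3, 5, 10):
    print(f"{n:>2} user(s) -> {share_of_problems_found(n):.0%} of problems")
```

With the default rate, one user surfaces about 31% of problems and five users about 84%, after which the returns flatten quickly. This is the reasoning behind running several small rounds of testing, as we did with refine.bio examples, rather than one large one.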

If you are in the business of creating tools for research and want to learn more about these ideas, the good news is that our friends in the tech and product world think about them all the time.

Here are a couple of places to start:

Finally, if you can budget for it, hire software engineers, UX Researchers, and UX Designers and, more importantly, make sure they are involved in the early stages of planning.
